Technology

From Idea to Impact - How Machine Learning Models Go Live

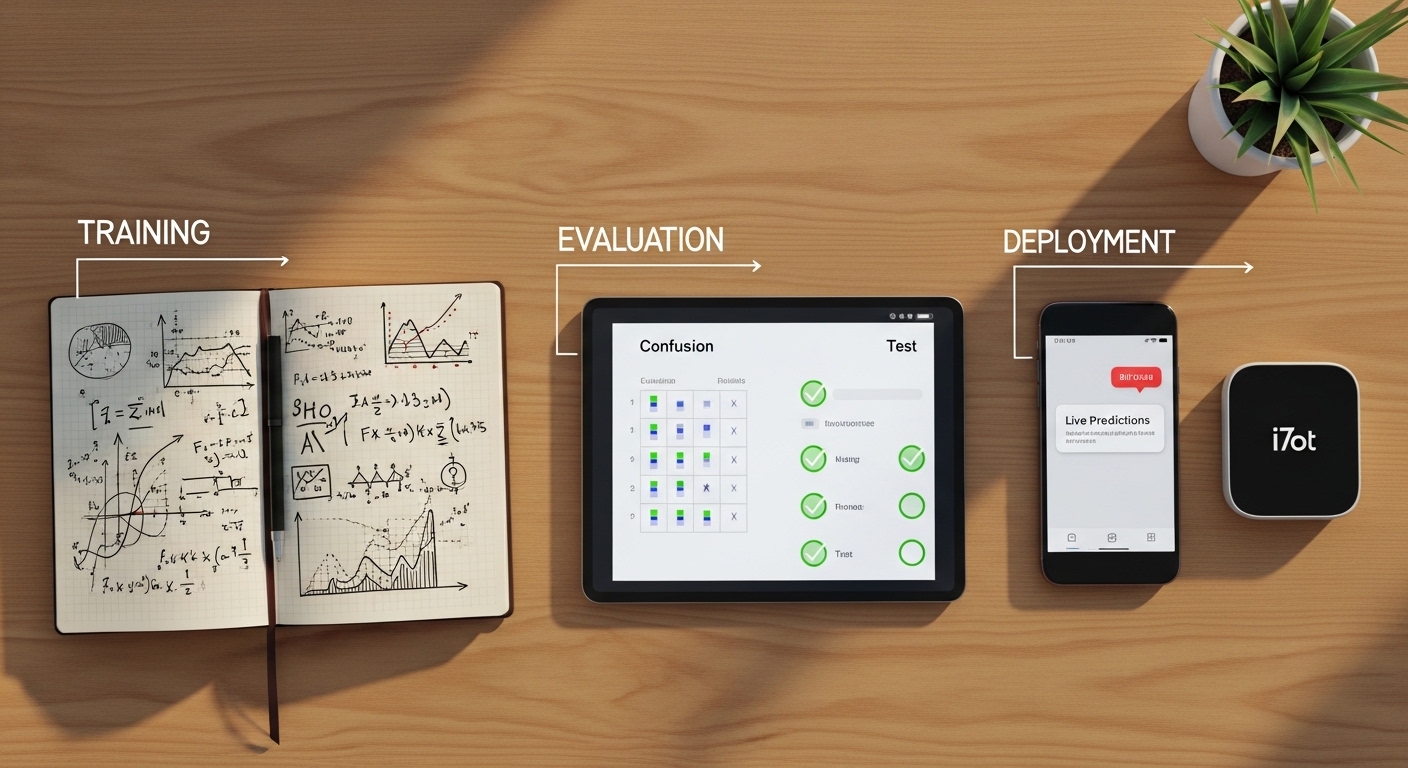

Machine learning models don’t go straight from cool idea to real‑world impact. They move through three big stages: training, evaluation, and deployment. You can think of this as teaching, testing, and then trusting a model enough to let it help in real applications. Understanding this journey without too much jargon makes it much easier to see what’s happening behind many AI systems you use every day.

Teaching the Model: Training

Model training is where everything starts. First, you gather data that represents the problem you want to solve: maybe past customer purchases, medical records, images, or text messages. If you’re doing supervised learning, each example usually comes with a correct answer, such as “spam” or “not spam,” “clicked” or “not clicked,” or a real number like a house price.

Before the model can learn, the data has to be cleaned and prepared. This often means fixing missing values, removing obvious errors, and turning messy, real‑world information (like dates, categories, or raw text) into numerical features a model can understand. Then the dataset is usually split into three parts: one for training, one for validation, and one for final testing.

During training, the model repeatedly sees the training data and adjusts its internal parameters to reduce its mistakes. With each pass through the data, it slowly gets better at connecting inputs such as features of a house to outputs such as its price. Hyperparameters settings chosen by the developer, like learning rate or tree depth control how this learning process behaves. These are tuned using the validation set, which acts like a mini exam to see which settings work best without touching the final test data.

Testing the Model: Evaluation

Evaluation is all about checking how trustworthy a model really is. To do this fairly, you use the test set data the model has never seen before. This step simulates what will happen once the model faces real users and new situations.

How performance is measured depends on the task. For classification problems, such as spam detection or disease prediction, accuracy shows how often the model is correct overall, but other metrics like precision and recall become very important when mistakes have different costs. For example, in medical diagnosis, missing a positive case can be much more serious than wrongly flagging a healthy person. For regression problems, where the goal is to predict numbers like sales or prices, error measures such as Mean Squared Error or Mean Absolute Error show how far the predictions are from the true values on average.

A crucial part of evaluation is checking for overfitting. If a model performs extremely well on training data but much worse on test data, it has probably memorized specific examples instead of learning general patterns. Evaluation is also where different models are compared, and where fairness and robustness are examined for example, checking whether performance is consistent across different age groups, locations, or device types. Only when a model passes these checks does it become a serious candidate for deployment.

Putting the Model to Work: Deployment

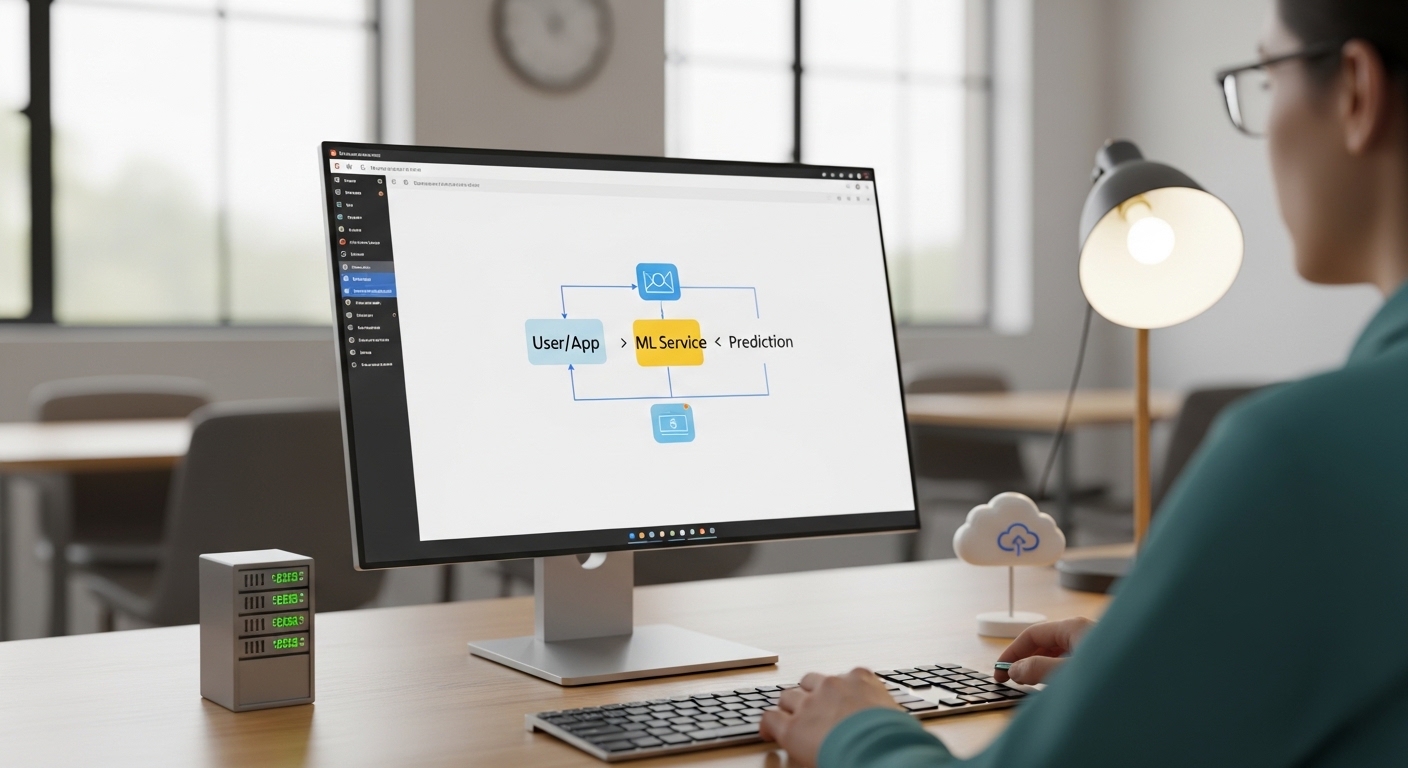

Deployment is the step where the model leaves the comfort of notebooks and development environments and starts helping real systems and real people. In many cases, the model is wrapped inside an API so that applications like a website, mobile app, or internal tool can send it data and receive predictions in return. For example, an e‑commerce site might send user behavior data to a recommendation model and then show the predicted “You may also like” products on the page.

Sometimes models are deployed as batch jobs that run on a schedule, for instance, scoring all customers every night for credit risk or churn likelihood. In other cases, they run directly on devices, like phones or IoT sensors, where low latency and offline capability are important. No matter how it’s deployed, the goal is the same: integrate the model smoothly into a workflow so that predictions arrive at the right time and place to be useful.

One Connected Lifecycle

Model training, evaluation, and deployment are not isolated steps; together they form a continuous loop. Data is collected, a model is trained, its performance is evaluated, and then it is deployed and monitored. Feedback from the real world leads to new data, updated models, and better versions over time.

Even if you never build a model yourself, knowing this lifecycle helps you ask better questions about any AI system: How was it trained? How was it tested? How is it monitored and updated? Those questions sit at the heart of using machine learning in a way that is not only powerful, but also safe, fair, and trustworthy.

Test Your Knowledge!

Click the button below to generate an AI-powered quiz based on this article.

Did you enjoy this article?

Show your appreciation by giving it a like!

Conversation (0)

Cite This Article

Generating...