
Turning Raw Data into Something a Model Can Actually Use

Behind every impressive AI model is something far less glamorous but absolutely essential: good data. Not just lots of data, but useful data. That’s where feature engineering and preprocessing come in. If you imagine the model as a chef, raw data is the unwashed vegetables and random ingredients you dump on the counter. Preprocessing is washing and cutting; feature engineering is deciding what dish you’re going to make and how to combine the ingredients so the result actually tastes good.

The Messy Truth About Real‑World Data

In theory, data looks clean and organized in tidy tables. In reality, it’s full of gaps and oddities: missing ages in a health dataset, strange house sizes in a housing file, and inconsistent product names in customer logs. If you feed that straight into a model, you’re basically asking it to learn from chaos.

So most ML projects quietly start with a lot of cleaning: fixing impossible values like a negative house price, filling in missing blood pressure readings in medical data, and making sure “NY”, “New York”, and “NewYork” don’t all appear as different cities in customer records. It doesn’t feel like AI magic yet, but without this step, there is no magic later.
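The three cleaning steps above can be sketched in plain Python. This is only a minimal illustration: the records, the field names (`city`, `price`, `systolic_bp`), the alias table, and the choice of the median as a fill value are all assumptions made for the example, not part of any particular dataset or library.

```python
from statistics import median

# Hypothetical raw records; field names and values are made up for illustration.
raw_records = [
    {"city": "NY", "price": 450000, "systolic_bp": 120},
    {"city": "New York", "price": -1, "systolic_bp": None},
    {"city": "NewYork", "price": 520000, "systolic_bp": 135},
    {"city": "Boston", "price": 380000, "systolic_bp": 110},
]

# Assumed alias table mapping spelling variants to one canonical name.
CITY_ALIASES = {"ny": "New York", "new york": "New York", "newyork": "New York"}


def clean(records):
    """Return cleaned copies of the records without mutating the input."""
    # Median of the observed readings, used to fill the gaps.
    observed_bp = [r["systolic_bp"] for r in records if r["systolic_bp"] is not None]
    bp_fill = median(observed_bp)

    cleaned = []
    for r in records:
        row = dict(r)
        # A zero or negative price is impossible, so treat it as missing.
        if row["price"] is not None and row["price"] <= 0:
            row["price"] = None
        # Fill missing blood pressure readings with the median.
        if row["systolic_bp"] is None:
            row["systolic_bp"] = bp_fill
        # Collapse spelling variants of the same city into one name.
        key = row["city"].strip().lower()
        row["city"] = CITY_ALIASES.get(key, row["city"].strip())
        cleaned.append(row)
    return cleaned


cleaned_records = clean(raw_records)
```

Whether an impossible value should be marked as missing, corrected, or dropped depends on the project; marking it as missing is just one reasonable choice here.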

Translating the World into Numbers

Models are picky: they mainly understand numbers. Humans, on the other hand, write things like “Colombo”, “Hypertension”, and “Gold tier customer”. Bridging that gap is a big part of preprocessing.

A column like “City” in a housing or customer dataset isn’t just a string; it might become multiple binary flags, one per city. A “Date of visit” in a hospital system can be split into year, month, weekday, or “is weekend” to capture patterns in patient flow. Free‑text fields like review comments or doctor notes are turned into numeric representations so models can learn from them too.
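As a rough sketch of the first two ideas, the snippet below turns a city into binary flags (often called one-hot encoding) and splits a visit date into parts. The helper names `one_hot` and `date_features`, the list of cities, and the sample visit are hypothetical and exist only for this illustration.

```python
from datetime import date


def one_hot(value, categories, prefix):
    """Return one binary flag per known category."""
    return {f"{prefix}_{c}": int(value == c) for c in categories}


def date_features(d):
    """Split a date into parts a model can use."""
    return {
        "year": d.year,
        "month": d.month,
        "weekday": d.weekday(),  # Monday is 0, Sunday is 6
        "is_weekend": int(d.weekday() >= 5),
    }


# Hypothetical category list and visit record.
CITIES = ["Colombo", "Kandy", "Galle"]
visit = {"city": "Colombo", "visit_date": date(2024, 3, 9)}

features = {
    **one_hot(visit["city"], CITIES, prefix="city"),
    **date_features(visit["visit_date"]),
}
```

Here `features` holds three city flags, with only `city_Colombo` set to 1, plus the four date parts. Text fields need more involved techniques and are left out of this sketch.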

None of this changes what the data means to us, but it changes how clearly the model can see structure in it. In a way, you’re rewriting the story of the data in a language the algorithm can read.

When Scale Starts to Matter

Imagine a table where one column is age (0–100), another is house price up to millions, and another is monthly spend for a customer. For many algorithms, especially those that rely on distances or gradients, features with huge numeric ranges tend to dominate smaller ones, even if the smaller ones are more important.

That’s why we scale and normalize. After scaling, a patient’s age, a house’s area, and a shopper’s average basket value all speak to the model at a similar volume instead of one feature shouting while others whisper. This makes training more stable and often more accurate, especially for linear models and neural networks.
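Two common ways to do this are standardization, which computes z = (x - mean) / standard deviation, and min-max scaling, which maps values to the range 0 to 1. The sketch below implements both in plain Python; the sample ages and prices are invented, and mapping a constant column to zeros is an assumption chosen to avoid division by zero.

```python
from statistics import mean, pstdev


def standardize(values):
    """Rescale values to mean 0 and standard deviation 1 (z-scores)."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column has no variation; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def min_max(values):
    """Rescale values linearly so the minimum is 0 and the maximum is 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Invented sample columns on very different scales.
ages = [25, 40, 60, 75]
prices = [150_000, 300_000, 900_000, 2_400_000]

scaled_ages = standardize(ages)
scaled_prices = standardize(prices)
```

After standardization both columns have mean 0 and standard deviation 1, so neither dominates simply because of its units.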

Feature Engineering - Where Creativity Meets Data

If preprocessing is cleaning and organizing, feature engineering is the fun, creative part: deciding what new signals might help the model see the problem more clearly.

- In a housing model, you might create price per square foot or age of house from year built, instead of using only raw size and year.

- In a customer‑churn model, you might calculate average orders per month, time since last purchase, or number of product categories visited from raw click and transaction logs.

- In a health model, you might combine multiple lab values into risk scores, or derive BMI from height and weight, giving the model more direct clues about a patient’s condition.

These new features come from domain knowledge and curiosity: what actually drives this outcome in the real world? Very often, a simple model with thoughtful features for houses, customers, or patients will outperform a complex model trained on raw, unshaped data.
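A few of the derived features mentioned above are simple enough to sketch directly. The function names, field names, and sample values below are hypothetical; BMI uses the standard definition of weight in kilograms divided by height in metres squared.

```python
from datetime import date


def house_features(house, current_year):
    """Derive price per square foot and house age from raw fields."""
    return {
        "price_per_sqft": house["price"] / house["area_sqft"],
        "house_age": current_year - house["year_built"],
    }


def days_since_last_purchase(purchase_dates, today):
    """Number of days between the most recent purchase and today."""
    return (today - max(purchase_dates)).days


def bmi(weight_kg, height_m):
    """Body mass index: weight in kilograms over height in metres squared."""
    return weight_kg / height_m ** 2


# Invented sample inputs.
house = {"price": 450_000, "area_sqft": 1_500, "year_built": 1995}
derived = house_features(house, current_year=2024)

purchases = [date(2024, 1, 5), date(2024, 2, 20)]
recency = days_since_last_purchase(purchases, today=date(2024, 3, 1))

patient_bmi = bmi(weight_kg=70, height_m=1.75)
```

Each function is trivial on its own; the value comes from choosing which combinations of raw fields are worth computing for the problem at hand.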

Focusing on What Really Matters

Not every column deserves to stay. Some are noisy, redundant, or simply irrelevant. A random ID number, for example, rarely helps a model; neither does a field that is the same for almost everyone in the dataset.

Feature selection and dimensionality reduction help strip away these distractions. In a housing dataset, you might drop the listing ID; in a health dataset, you might remove highly duplicated or unstable measurements; in a customer dataset, you might focus on behavior features rather than one‑off internal codes. The goal is to leave the model with signals, not static.
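One very simple form of feature selection is to drop known ID columns and any column where nearly every row has the same value. The sketch below does exactly that and nothing more; the 0.95 threshold, the helper names, and the sample listings are assumptions for illustration, and dimensionality reduction techniques are not covered here.

```python
from collections import Counter


def near_constant(values, threshold=0.95):
    """True if the most common value covers at least `threshold` of the rows."""
    most_common_count = Counter(values).most_common(1)[0][1]
    return most_common_count / len(values) >= threshold


def select_columns(rows, id_columns=(), threshold=0.95):
    """Return the column names worth keeping: not an ID, not near-constant."""
    keep = []
    for col in rows[0]:
        if col in id_columns:
            continue
        if near_constant([r[col] for r in rows], threshold):
            continue
        keep.append(col)
    return keep


# Invented listings: `listing_id` is an identifier, `country` never varies.
listings = [
    {"listing_id": 101, "area_sqft": 900, "country": "LK", "bedrooms": 2},
    {"listing_id": 102, "area_sqft": 1500, "country": "LK", "bedrooms": 3},
    {"listing_id": 103, "area_sqft": 2100, "country": "LK", "bedrooms": 4},
]

kept = select_columns(listings, id_columns=("listing_id",))
```

In this example `kept` contains only `area_sqft` and `bedrooms`, since the ID is excluded by name and `country` is identical in every row.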

One Pipeline to Handle It All

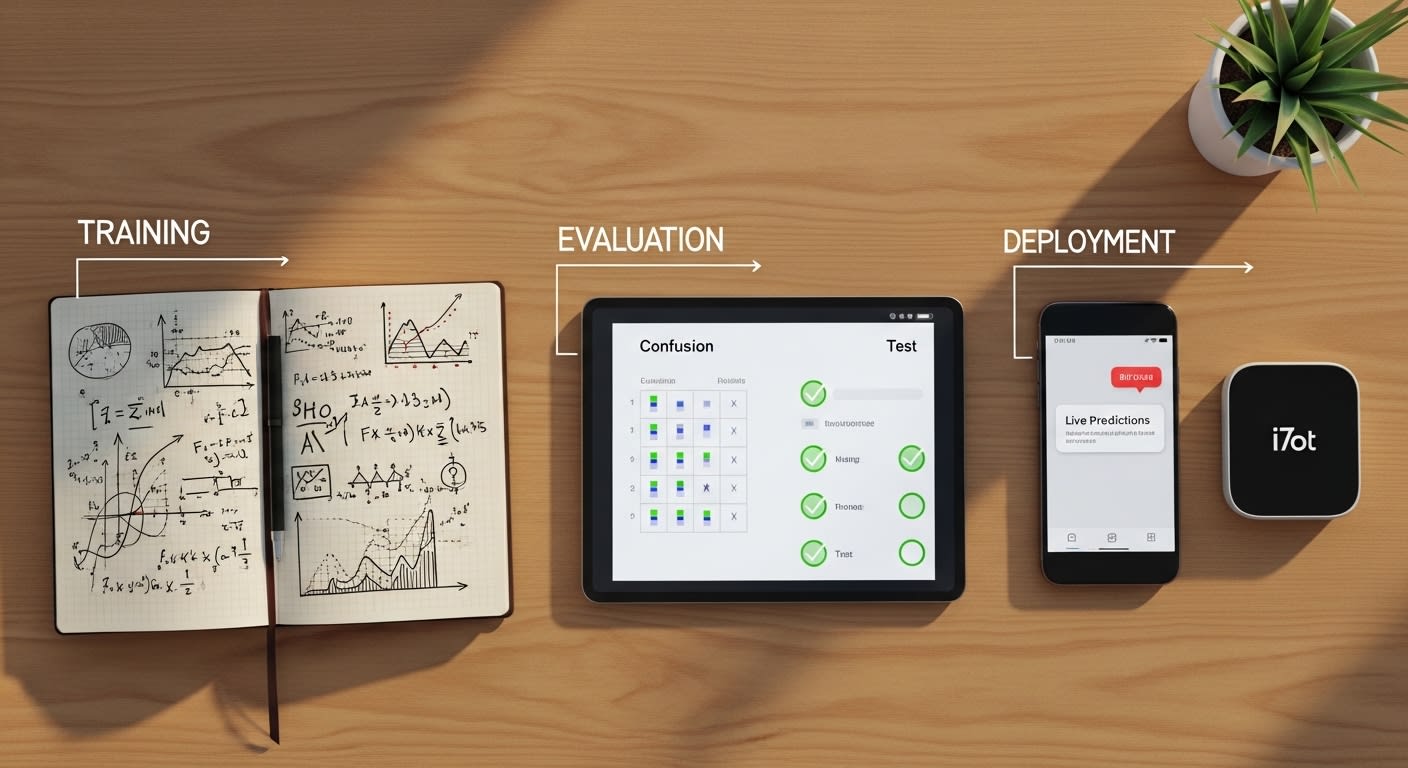

In practice, all of this becomes a pipeline:

- Take raw data about houses, customers, or patients.

- Clean and standardize it.

- Transform it: encode categories, scale numbers, engineer new features.

- Feed it to the model for training and, later, for predictions.

When new house listings, new transactions, or new patient records arrive, they must pass through the same pipeline so the model sees consistent input over time. This is why many real systems deploy preprocessing and feature engineering right alongside the model itself.
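The key idea is that whatever the pipeline learns from the training data (category lists, means, standard deviations) is stored once and then reused unchanged on new records. The toy class below is a minimal sketch of that pattern; `HousePipeline`, its field names, and the sample rows are hypothetical, and encoding an unseen city as all zeros is one possible policy rather than the only one.

```python
from statistics import mean, pstdev


class HousePipeline:
    """Toy preprocessing pipeline: learns parameters once, then reuses them."""

    def fit(self, rows):
        # Learn the city vocabulary and scaling statistics from training data only.
        self.cities = sorted({r["city"] for r in rows})
        areas = [r["area_sqft"] for r in rows]
        self.area_mean = mean(areas)
        self.area_std = pstdev(areas) or 1.0  # fall back to 1.0 to avoid dividing by zero
        return self

    def transform(self, rows):
        out = []
        for r in rows:
            # Scale the area with the statistics stored during fit.
            vector = [(r["area_sqft"] - self.area_mean) / self.area_std]
            # One flag per known city; a city not seen during fit becomes all zeros.
            vector += [int(r["city"] == c) for c in self.cities]
            out.append(vector)
        return out


# Invented training rows.
train = [
    {"city": "Colombo", "area_sqft": 1000},
    {"city": "Kandy", "area_sqft": 2000},
]
pipeline = HousePipeline().fit(train)
X_train = pipeline.transform(train)

# A new listing goes through the same fitted pipeline, with no refitting.
new_listing = [{"city": "Galle", "area_sqft": 1500}]
X_new = pipeline.transform(new_listing)
```

Because `transform` never recomputes the mean or the city list, the new listing produces a vector with the same length and meaning as the training vectors.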

Feature engineering and preprocessing may not be as flashy as deep neural networks, but they quietly decide how far your model can go. A well‑designed feature set for housing prices, a smart representation of customer habits, or a carefully cleaned health dataset often makes more difference than switching to the newest algorithm.

So the next time a machine learning system impresses you, remember: long before the model started learning, someone spent time understanding the data, shaping it, and giving the model the right clues. That’s where much of the real intelligence begins.
